HISTORY77 - Fifteen Accidental Inventions that Changed the World

Introduction

I’ve been blogging lately about inventions - some intentional, some accidental. This post concludes the four-part series with Fifteen Accidental Inventions that Changed the World. These include: penicillin, X-rays, the blood thinner warfarin, implantable cardiac pacemakers, the Pap smear, quinine, anesthesia, Viagra, Botox, smoke detectors, laminated safety glass, dynamite, vulcanized rubber, stainless steel cutlery, and dry cleaning.

For each of these 15 inventions, I will summarize the invention’s history and relate my (and/or Pat’s) personal experiences with the invention.

My principal sources include “30 Life-Changing Inventions That Were Totally Accidental,” bestlifeonline.com; “7 Momentous Inventions Discovered by Accident,” history.com; “The Best Accidental Inventions,” inventionland.com; “9 Successful Inventions Made by Accident,” concordia.edu/blog; “15 Of the Coolest Accidental Inventions,” science.howstuffworks.com; “10 Accidental Discoveries That Changed the World,” rd.com; plus numerous other online sources.

So here are my fifteen accidental inventions that changed the world, in no particular order.

Penicillin

Penicillin was the first antibiotic developed to treat bacterial infections. Before penicillin was discovered and made into an antibiotic, a simple scratch could lead to an infection that could kill.

Penicillin and other antibiotics have saved millions of lives.

Note: Antibiotics are not effective against viruses such as the common cold or influenza. They are also not effective against fungi.

Bacterial infections can occur throughout the body, including the skin, teeth, ears, nose, throat, and lungs.

Common infectious diseases caused by bacteria include strep throat; salmonella (in the intestinal tract); tuberculosis; whooping cough; syphilis, chlamydia, gonorrhea and other sexually transmitted infections; urinary tract infections; E. coli (in the intestines, can cause severe stomach cramps, bloody diarrhea and vomiting); and Clostridioides difficile (in the large intestine, causes diarrhea and colitis, an inflammation of the colon).

There is good historical evidence that ancient civilizations used a variety of naturally available treatments for infection, for example herbs, honey and even animal feces. One of the more successful treatments was the topical application of moldy bread, with many references to its beneficial effects from ancient Egypt, China, Serbia, Greece and Rome. The belief in the benefits of molds persisted over the centuries, with references by English botanist John Parkinson in his book Theatrum Botanicum, published in 1640.

In 1928, Dr. Alexander Fleming, a Scottish physician and bacteriologist, discovered penicillin and opened the world to antibiotics.

Returning to his laboratory at St. Mary’s Hospital Medical School in London from a two-week vacation, Fleming realized that he had left cultures of Staphylococcus aureus in a petri dish, which he had meant to throw away before he left. To his surprise, some of the bacteria had died. Further investigation led Fleming to discover that a fungus, which had grown in the culture, had destroyed the bacteria. The fungus was a mold called Penicillium notatum, similar to the mold that grows on bread.

Fleming published his findings in the British Journal of Experimental Pathology in 1929, but the report didn’t garner much interest.

Then in 1938, Ernst Chain, a biochemist working with pathologist Howard Florey at Oxford University, came across Fleming’s paper while he was researching antibacterial compounds. Scientists in Florey’s lab started working with penicillin, which they eventually injected into mice to test whether it could treat bacterial infections. Their experiments were successful, and they went on to test it in humans, where they also saw positive results.

Penicillin was discovered in mold growing on a laboratory petri dish.

Penicillin and its variants are still widely used today for different bacterial infections, though many types of bacteria have developed resistance following extensive use.

I was born (1940) just as penicillin became available as a medication. Ironically, this wonderful drug is the only medication that I am allergic to (hives).

Pat had penicillin a few times in her younger years.

X-rays

In 1895, German physicist Wilhelm Conrad Rontgen was investigating electrical discharges from a vacuum tube in his laboratory in Wurzburg, Germany. He had wrapped the vacuum tube in black cardboard so that the visible light from the tube would not interfere. He noticed a mysterious faint green glow from a chemically treated fluorescent screen about three feet away. Rontgen realized that some invisible rays coming from the tube were passing through the cardboard to make the screen glow. He found they could also pass through books and papers on his desk. Confused and intrigued, he named the new rays causing this glow X-rays, because their nature was unknown.

Rontgen threw himself into investigating these unknown rays systematically. He discovered their medical use when he used X-rays to make an image of his wife's hand on a photographic plate. This was the first photograph of a human body part using X-rays. Two months after his initial discovery, he published his first paper.

The first “medical” X-ray, taken of the hand of Wilhelm Rontgen’s wife, on 22 December 1895.

The discovery of X-rays generated significant interest. Rontgen’s biographer Otto Glasser estimated that, in 1896 alone, as many as 49 essays and 1,044 articles about the new rays were published. This was probably a conservative estimate, considering that nearly every newspaper around the world reported extensively on the new discovery, and a magazine such as Science dedicated as many as 23 articles to it in that year alone.

The first use of X-rays under clinical conditions was by John Hall-Edwards in Birmingham, England on January 11, 1896, when he radiographed a needle stuck in the hand of an associate. On February 14, 1896, Hall-Edwards was also the first to use X-rays in a surgical operation.

Technological improvements in X-ray generation and image evaluation for medical imaging have led to better diagnosis and treatment of numerous medical conditions in children and adults.

Today, there are three main types of medical X-ray imaging applications.

Radiography - a single image is recorded for later evaluation. Mammography is a special type of radiography used to image the internal structures of the breasts.

Fluoroscopy - a continuous X-ray image is displayed on a monitor, allowing real-time monitoring of a procedure or of the passage of a contrast agent ("dye") through the body.

Computed tomography (CT) aka computed axial tomography (CAT) - many X-ray images are recorded as the detector moves around the patient's body. A computer reconstructs all the individual images into cross-sectional images or "slices" of internal organs and tissues.

Like many people, I have had several dental X-rays, diagnostic chest X-rays, and X-rays to set and monitor broken bones over my lifetime. Pat also has had dental and chest X-rays, as well as numerous mammograms, bone-density scans, and lately diagnostic CT scans.

All in all, X-rays have been vital to our prolonged active lives.

Blood Thinner Warfarin

Warfarin, a common blood thinner, was discovered not in a lab but in a field, where livestock were dying from a mysterious disease.

In the 1920s, cattle and sheep that grazed on moldy sweet clover hay began to suffer from internal bleeding. Many previously healthy animals also bled to death after simple veterinary procedures.

A Canadian veterinarian, Frank Schofield, determined that the moldy hay contained an anticoagulant that was preventing the animals' blood from clotting. In 1940, scientists at the University of Wisconsin, led by biochemist Karl Link, isolated the anticoagulant compound in the moldy hay. A particularly powerful derivative of the compound was patented as warfarin, named after the Wisconsin Alumni Research Foundation that funded its development.

But before it was used medicinally in humans, warfarin was used as rat poison. In 1948, it was approved for use as a rodenticide. It wasn’t until the mid-1950s that warfarin entered clinical use. It was formally approved as a medication to treat blood clots in humans by the U.S. Food and Drug Administration in 1954.

Among its early patients was President Dwight D. Eisenhower, whose 1955 heart attack was treated with warfarin.

Warfarin is usually taken by mouth, but may also be administered intravenously. While the drug is described as a “blood thinner,” it does not reduce the blood's viscosity; instead, it inhibits coagulation. It is commonly used to prevent blood clots in the circulatory system, such as deep vein thrombosis and pulmonary embolism, and to protect against stroke in people who have atrial fibrillation, valvular heart disease, or artificial heart valves.

Excessive bleeding is the most common side effect of warfarin.

Today, about 2 million people in the United States take warfarin, available as Coumadin® or Jantoven®.

Neither Pat nor I have ever taken warfarin. We both take other anticoagulants today, so we probably owe a debt to the discovery and success of warfarin.

Implantable Cardiac Pacemaker

An implantable cardiac pacemaker is a battery-powered device, placed under a person's skin, that helps the heart perform normally. The device sends electrical pulses to keep the heart beating at a regular rhythm, preventing potentially life-threatening complications from irregular heartbeats in many patients.

In 1956, Wilson Greatbatch, an adjunct professor of engineering at the University of Buffalo, was working on a device to record the rhythm of a human heartbeat, but he used the wrong-sized resistor in the circuit. The device instead produced intermittent electrical impulses that closely mimicked the rhythm of a human heartbeat. Greatbatch realized that such a circuit could drive electrodes attached directly to the muscle tissue of the heart, keeping a patient's heartbeat on track.

While hospitals already had pacemaker machines, they were large, painful, and immobile. Greatbatch realized that a pacemaker implanted in the human body would free patients who needed pacemakers from having to remain in the hospital and from having to use the painful machines.

In 1958, Greatbatch collaborated with Dr. William Chardack of the Buffalo Veterans Administration Medical Center and Dr. Andrew Gage to implant an electrode attached to a pulse generator in a dog. They worked for the next two years to refine their design into a unit that could be implanted in the human body and would maintain the same pulse rhythm for long periods of time.

In 1960, they implanted a pacemaker into a 77-year-old man, who lived for 10 months after the surgery. In that same year, they implanted pacemakers into nine other patients, several of whom lived for more than 20 years after the implant.

Greatbatch and Chardack’s design was the first implantable pacemaker to be commercially produced.

Modern pacemakers are externally programmable, allowing a cardiologist to select the optimal pacing mode for each patient. Most pacemakers are “on demand,” meaning that stimulation of the heart is based on the dynamic demand of the circulatory system. Others send out impulses at a fixed rate.

Today, more than 3 million people worldwide have implanted cardiac pacemakers.

Wilson Greatbatch holding the first implantable pacemaker.

Thankfully, neither Pat nor I have needed a cardiac pacemaker. However, my brother had one “installed” last year, and is doing fine.

Pap Smear

The American Cancer Society's estimates for cervical cancer in the United States for 2023 are:

a. About 13,960 new cases of invasive cervical cancer will be diagnosed.

b. About 4,310 women will die from cervical cancer.

The Pap smear is a medical screening test designed to identify abnormal, potentially precancerous cells within the cervix (the lower part of the uterus, or womb, that opens into the vagina), as well as cells that have progressed to early stages of cervical cancer. In the procedure, a small brush is used to gently remove cells from the surface of the cervix and the area around it so they can be checked under a microscope for cervical cancer or cell changes that may lead to cervical cancer. A Pap smear may also help find other conditions, such as infections or inflammation.

The test was invented by, and named after, the Greek doctor Georgios Papanikolaou. In 1923, while observing a slide of cells taken from a woman's uterus, Papanikolaou came up with the idea of using the smear to test for cancer. Originally, Papanikolaou's intention was simply to observe cellular changes during a woman's menstrual cycle, but during his study he discovered that one of his patients had uterine cancer - and that her cancer cells could easily be viewed under a microscope.

The Pap test was finally recognized only after Papanikolaou and Herbert F. Traut, an American gynecologist, published an article in the American Journal of Obstetrics and Gynecology in 1941. Their subsequent monograph, Diagnosis of Uterine Cancer by the Vaginal Smear, contained drawings of the various cells seen in patients with no disease, inflammatory conditions, and preclinical and clinical carcinoma. Both Papanikolaou and his wife, Andromachi Papanikolaou, dedicated the rest of their lives to teaching the technique to other physicians and laboratory personnel.

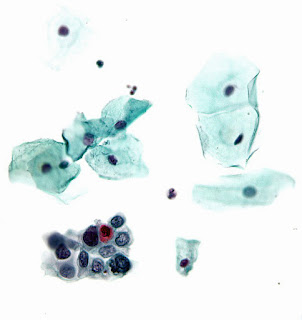

Pap smear showing abnormal squamous cells.

Over the years, the Pap smear has saved many lives. Medical guidance today is that women aged 21 to 29 should have a Pap test about every three years. Those aged 30 to 65 should have a Pap test every three to five years.

Pat has had regular Pap smears over her lifetime, thankfully all negative.

Quinine

Malaria is a mosquito-borne infectious disease that affects humans and other animals. Malaria causes symptoms that typically include fever, tiredness, vomiting, and headaches. In severe cases, it can cause jaundice, seizures, coma, or death. Over millennia, its victims have included Neolithic dwellers, early Chinese and Greeks, and princes and paupers.

In the 20th century alone, malaria claimed between 150 million and 300 million lives, accounting for 2 to 5% of all deaths.

The discovery of quinine is considered the most serendipitous medical discovery of the 17th century, and malaria treatment with quinine marked the first successful use of a chemical compound to treat an infectious disease.

Quinine, as a component of the bark of the cinchona tree, was used to treat malaria from as early as the 1600s, when it was referred to as the "Jesuits' bark," "cardinal's bark," or "sacred bark."

These names stem from its use in 1630 by Jesuit missionaries in South America, though a legend suggests earlier use by the native population. According to this legend, an Indian with a high fever was lost in an Andean jungle. Thirsty, he drank from a pool of stagnant water and found that it tasted bitter. Realizing that the water had been contaminated by the surrounding cinchona trees, he thought he had been poisoned. Surprisingly, his fever soon abated, and he shared this accidental discovery with fellow villagers, who thereafter used extracts from the cinchona bark to treat fever.

Before 1820, the bark of the cinchona tree was first dried, ground to a fine powder, and then mixed into a liquid (commonly wine) before being ingested.

In 1820, French pharmacists Pierre Joseph Pelletier and Joseph Caventou extracted quinine from the bark, isolated it, and named it. Purified quinine then replaced the bark as the standard treatment for malaria.

Drawing showing the source of the anti-malaria drug quinine.

Quinine remained the mainstay of malaria treatment until the 1920s, when more effective synthetic anti-malaria drugs became available.

As of 2006, quinine is no longer recommended by the World Health Organization as a first-line treatment for malaria, because other drugs are equally effective with fewer side effects. Quinine is still used to treat lupus. While quinine is not approved by the FDA for the treatment of arthritis, some people use it as a natural remedy for pain relief. Quinine is thought to have anti-inflammatory properties, which may help reduce swelling and pain in the joints.

Thankfully, neither Pat nor I have contracted malaria, and therefore we have never required quinine. Similarly, we have not needed to take antimalarial drugs on our travels.

Anesthesia

Early anesthesia (probably herbal remedies) can be traced back to ancient times (Babylonians, Greeks, Chinese, and Incas), but one of the first European accounts dates to the 1200s, when Theodoric of Lucca, an Italian physician and bishop, used sponges soaked with opium and mandragora (from the mandrake plant) for surgical pain relief. Hashish and Indian hemp were also commonly used as painkillers.

Up until the mid-1800s, however, surgeons could not offer patients much more than opium, alcohol, or a bullet to bite on to deal with the agonizing pain of surgery.

The most famous anesthetic, ether, may have been synthesized as early as the 8th century, but it took many centuries for its anesthetic importance to be appreciated, even though the 16th-century physician and polymath Paracelsus noted that chickens made to breathe it not only fell asleep but also felt no pain. By the early 19th century, ether was being used by humans, but only as a recreational drug.

Meanwhile, in 1772, English scientist Joseph Priestley discovered the gas nitrous oxide. Initially, people thought this gas was lethal, even in small doses, like some other nitrogen oxides. However, in 1799, British chemist and inventor Humphry Davy decided to find out by experimenting on himself. To his astonishment, he found that nitrous oxide made him laugh, so he nicknamed it “laughing gas.” In 1800, Davy wrote about the potential of nitrous oxide to relieve pain during surgery, but nobody at that time pursued the matter any further.

American surgeon and pharmacist Crawford Long is known as the inventor of modern anesthetics in the West. Long noticed that his friends felt no pain when they injured themselves while staggering around under the influence of ether.

He immediately thought of its potential in surgery. Conveniently, a participant in one of those “ether frolics,” a student named James Venable, had two small tumors he wanted excised. But fearing the pain of surgery, Venable kept putting the operation off. So Long suggested that he have the operation while under the influence of ether.

Venable agreed, and on March 30, 1842, he underwent a painless operation. However, Long did not announce his discovery until 1849.

American dentist Horace Wells conducted the first public demonstration of an inhalational anesthetic at the Massachusetts General Hospital in Boston in 1845. However, the nitrous oxide was improperly administered and the patient cried out in pain.

On October 16, 1846, another Boston dentist, William Thomas Green Morton, was invited to Massachusetts General Hospital to demonstrate his new technique for painless surgery using ether as the anesthetic. After Morton induced the anesthesia, surgeon John Collins Warren removed a tumor from the neck of Edward Gilbert Abbott. This occurred in the surgical amphitheater now called the Ether Dome. The previously skeptical Warren was impressed and stated, "Gentlemen, this is no humbug." This groundbreaking event was the first successful public demonstration of the use of ether as an anesthetic.

In a letter to Morton shortly thereafter, physician and writer Oliver Wendell Holmes, Sr. proposed naming the state produced “anesthesia,” and the procedure an “anesthetic.” News of the successful anesthetic spread quickly in late 1846, and the use of anesthesia soon spread around the world for both dental and medical purposes.

Anesthetic being administered today prior to an operation.

Today, many types of anesthesia can be administered to keep a patient comfortable and pain-free during surgery, medical procedures, or tests.

General anesthesia is used for major operations, such as a knee replacement or open-heart surgery, and causes the patient to lose consciousness.

IV/Monitored sedation is often used for minimally invasive procedures like colonoscopies. The level of sedation ranges from minimal - drowsy but able to talk - to deep unconsciousness.

Regional anesthesia is often used during childbirth and surgeries of the arm, leg, or abdomen. It numbs a large part of the body, but the patient remains aware.

Local anesthesia is for procedures such as getting stitches or having a mole removed. It numbs a small area, and the patient is alert and awake.

Over our lifetimes, both Pat and I have had ample opportunity to experience anesthesia of all types. Thank you so much to the inventors!

Viagra

One of the most prescribed drugs in the world, sildenafil, sold under the brand name Viagra, was originally developed to help treat high blood pressure and angina, chest pain caused by reduced blood flow through the vessels that supply the heart.

In 1989, sildenafil was synthesized by a group of pharmaceutical chemists working at Pfizer's research facility in Sandwich, Kent, England. Phase I clinical trials showed that the drug had little effect on angina, but it could induce more frequent and stronger erections in male participants. While at the time this may have been a disappointment to those who developed it, their accidental invention turned into a gold mine for Pfizer.

Pfizer decided to market it for erectile dysfunction rather than for angina; this decision became an often-cited example of drug repositioning. The drug was patented in 1996 and approved for use in erectile dysfunction by the FDA on March 27, 1998, becoming the first oral treatment approved for erectile dysfunction in the United States, and it went on sale later that year. It soon became a great success; annual sales of Viagra peaked in 2008 at $1.934 billion.

Viagra, the familiar little blue pill for erectile dysfunction.

Teva Pharmaceuticals was the first company to make generic Viagra, launching it in December 2017, and more manufacturers have come to the table since.

In 2020, Viagra was the 183rd most commonly prescribed medication in the United States, with more than 2 million prescriptions. It is taken by mouth or by injection into a vein. Onset is typically within twenty minutes, and the effect lasts for about two hours.

Let’s just say that I have some familiarity with this drug.

Botox

Botox is a drug made from a toxin produced by the bacterium Clostridium botulinum. It's the same toxin that causes a life-threatening type of food poisoning called botulism. Doctors use it in small doses to treat health problems and to temporarily smooth facial wrinkles and improve appearance.

Botulism is a rare but very serious illness that is transmitted through food, contact with contaminated soil, or an open wound. Without early treatment, botulism can lead to paralysis, breathing difficulties, and death. Certain foods, such as home-canned foods, provide a potent breeding ground. About 145 cases of botulism are reported every year in the United States; about 3 to 5% of those with botulism poisoning die.

The discovery of the botulinum toxin dates all the way back to 1820s Germany, when a physician named Dr. Justinus Kerner was investigating the deaths of several people from food-borne botulism. More specifically, Dr. Kerner was studying the neurological effects of the poison - muscle weakness, difficulty swallowing, drooping eyelids and, in some cases, paralysis and respiratory failure. His research not only helped in understanding prevention and treatment, but also planted the seed for using the toxin therapeutically, as we do today.

By the 1940s, botulinum toxin was considered to be the most dangerous substance in the world, and the U.S. was researching it for use as a biological weapon during World War II.

In the 1950s and 60s, scientists were able to purify botulinum toxin, and research began into using the purified toxin for therapeutic purposes. It was used as early as the 1960s to treat strabismus, or crossed eyes. By the late 1980s, it was FDA-approved and used regularly to treat crossed eyes and eyelid spasms.

Its cosmetic advantages were discovered accidentally in 1987 by ophthalmologist Dr. Jean Carruthers, who noticed that when patients were treated for eyelid spasms using the toxin, there was a bonus side effect of reduced forehead lines. She published a paper on the subject in 1992, and once word got out, Botox (as it was now called) became the hottest ticket in town.

Botox shots block certain chemical signals from nerves that cause muscles to contract. The most common use of these injections is to relax the facial muscles that cause frown lines and other facial wrinkles.

Botox was FDA-approved for cosmetic use in 2002, and since then has consistently remained the number one nonsurgical procedure in the U.S., with over one million people using it for its rejuvenating anti-aging effects each year. Botox has also been FDA-approved to treat several medical issues, including hyperhidrosis (excessive sweating), chronic migraines, and overactive bladder.

Patient about to receive a shot of Botox.

Neither Pat nor I have ever used Botox, having long ago accepted our “life experience” wrinkles.

Smoke Detector

Smoke detectors are so commonplace in homes and businesses that they’re easy to overlook. But their invention has saved millions of lives, and having a working smoke detector in the home decreases the risk of dying in a fire by more than half.

In the 1930s, Swiss scientist Walter Jaeger accidentally invented the smoke detector while trying to invent a sensor to detect poison gas. His sensor was supposed to move a meter when poison gas entered it and altered the electrical current inside. But when he tried to get his device to work with the poison gas, nothing happened. So he lit a cigarette and pondered what to do next… and the detector went off (moving the meter) when it detected his cigarette smoke. The first modern smoke detector was born.

Jaeger’s smoke detector employed a small, harmless amount of radioactive material to ionize the air in a sensing chamber; the presence of smoke affected the flow of the ions between a pair of electrodes, which produced a weak signal to trigger an alarm.

In 1939, another Swiss scientist, Ernst Meili, improved upon Jaeger’s invention. He invented a device to amplify the weak signal made by the smoke detector’s mechanism enough to reliably trigger the alarm. This, in turn, made the ionization chamber smoke detector more sensitive and more effective.

In 1963, the Atomic Energy Commission (AEC) granted the first license to distribute smoke detectors using radioactive material. These detectors were mostly used in public buildings, including warehouses and factories, during the 1960s. In 1969, the AEC first allowed homeowners to use smoke detectors - without the need for a license. By the 1970s, ionization chamber smoke detectors in homes had become commonplace.

Today, besides ionization chamber smoke detectors, there are photoelectric smoke detectors that use a beam of light inside the device to detect smoke. When smoke enters the detector, it disrupts the beam of light and thus triggers the alarm.

Nine in 10 homes have smoke detectors today. Between 80 and 90% of them are ionization chamber smoke detectors.

Smoke detectors are usually housed in plastic enclosures, typically shaped like a disk about six inches in diameter and one inch thick. Many smoke detectors operate on a 9-volt battery.

Household smoke detectors are typically mounted on the ceiling.

Household smoke detectors generally issue an audible or visual alarm from the detector itself, or from several detectors if multiple devices are interlinked. Household smoke detectors range from individual battery-powered units to several interlinked units with battery backup.

With interlinked units, if any unit detects smoke, alarms will trigger at all of the units. This happens even if household power has gone out.

Smoke detectors in large commercial and industrial buildings are usually connected to a central fire alarm system.

In our current home, built in 1995, there are four ceiling-mounted, interlinked ionization chamber smoke alarms that operate on the home’s 120-volt power source with a 9-volt battery backup. Thankfully, we have had no fire or smoke issues, but we have suffered the inconvenience several times of having to replace a battery in a “chirping” detector in the middle of the night.

Laminated Safety Glass

Safety glass is glass with additional features that make it less likely to break, or less likely to pose a threat when broken. Common designs include toughened glass (also known as tempered glass), wire mesh glass, and laminated glass.

Toughened glass was invented in 1874 by Francois Barthelemy Alfred Royer de la Bastie. Tempered glass is often used today in car windows, shower doors, glass tables, and other installations where increased safety standards are necessary. While the manufacturing process does make tempered glass more resistant to force, it is not unbreakable. If the glass does break, it shatters into small pebbles, reducing the risk of serious injury.

Wire mesh glass was invented in 1892 by Frank Shuman. The wire mesh acts as reinforcement: if the glass breaks due to impact, the wire holds the pieces of glass in position. Wired glass is used today in partitions and windows of public buildings, schools, hotels, and institutions. It is often used in windows along routes to fire escapes, where it helps increase the time available for evacuating people in a fire or other emergency.

Laminated glass was invented in 1903 by the French chemist Edward Benedictus, inspired by a laboratory accident: a glass flask coated with the plastic cellulose nitrate was accidentally dropped. When Benedictus looked down, he noticed that rather than breaking into a million little pieces, the flask had merely cracked while keeping its shape. After looking into it a bit further, he learned that what had kept the glass together was the cellulose nitrate coating the inside of the flask - and thus the idea of laminated safety glass was born.

In 1909, Benedictus filed a patent after hearing about a car accident in which two women were severely injured by glass debris. In 1911, he formed the Triplex Glass Company, which fabricated a glass-plastic composite windshield to reduce injuries in car accidents. Production of Triplex glass was slow and painstaking, which made it expensive, so it was not immediately widely adopted by automobile manufacturers. But laminated glass was widely used in the eyepieces of gas masks during World War I.

In 1912, the laminated safety glass process was licensed to the English Triplex Safety Glass Company. Subsequently, in the United States, both Libbey-Owens-Ford and Du Pont, with Pittsburgh Plate Glass, produced Triplex glass.

In 1927, Canadian chemists Howard W. Matheson and Frederick W. Skirrow invented the plastic polyvinyl butyral (PVB). By 1936, United States companies had discovered that laminated safety glass consisting of a layer of PVB between two layers of glass would not discolor and was not easily penetrated during accidents. The interlayer kept the layers of glass bonded even when broken, and its toughening prevented the glass from breaking up into large sharp pieces. This produced a characteristic "spider web" cracking pattern (radial and concentric cracks) when the impact was not enough to completely pierce the glass.

Within five years, the new laminated safety glass had substantially replaced its predecessor.

Laminated safety glass consists of two pieces of glass bonded with a vinyl interlayer, usually PVB.

Today, besides automobile windshields, laminated glass is normally used where there is a possibility of human impact or where the glass could fall if shattered. Store windows, doors, and skylights, for example, are typically made of laminated glass. Buildings with glass floors also use laminated glass to prevent breakage from foot traffic. The material can even be made bulletproof, so it may be found in police cars and stations. In geographical areas requiring hurricane-resistant construction, laminated glass is often used in exterior storefronts, weather protection facades, and windows.

The PVB interlayer also gives laminated glass a much higher sound insulation rating and blocks most incoming UV radiation (88% in window glass and 97.4% in windscreen glass).

Pat and I have both experienced car windshield damage from flying objects (rocks?). Sometimes the damaged area was small enough to be repaired; other times the entire windshield had to be replaced. But in all cases, the laminated safety glass did its job!

Dynamite

Though the explosive substance nitroglycerin was invented by Ascanio Sobrero, it was Alfred Nobel who used it to make dynamite.

Nitroglycerin was invented in 1847 by Italian chemist Ascanio Sobrero, who combined glycerol with nitric and sulfuric acids to produce an explosive compound. It was far more powerful than gunpowder, and more volatile. Sobrero was opposed to its use, but his lab mate, Swedish chemist and engineer Alfred Nobel, saw potential for creating profitable explosives and weapons.

Nitroglycerin became a commonly used explosive, but it was quite unstable and prone to spontaneous explosions, making it difficult for scientists to experiment with.

In the early 1860s, Alfred Nobel, along with his father and his brother Emil, experimented with various combinations of nitroglycerin and black powder. Nobel found a way to detonate nitroglycerin safely by inventing the detonator, or blasting cap, which allowed a controlled explosion to be set off from a distance using a fuse. In 1863, Nobel performed his first successful detonation of pure nitroglycerin using a blasting cap.

On September 3, 1864, Emil and several others were killed in an explosion while experimenting with nitroglycerin. After this, Alfred continued his work in a more isolated area.

Despite the invention of the blasting cap, the instability of nitroglycerin still made it impractical as a commercial explosive. One day Nobel was working with nitroglycerin when a vial accidentally fell to the floor and smashed. But it didn’t explode, because the liquid landed on a pile of sawdust, which helped stabilize it. Nobel later perfected the mixture by using kieselguhr, a form of silica, as a stabilizing substance.

Nobel patented his new explosive in 1867. Far more powerful than black powder, dynamite rapidly gained wide-scale use as a more robust alternative to traditional black powder explosives. It allowed the use of nitroglycerin’s favorable explosive properties while greatly reducing its risk of accidental detonation.

Nobel tightly controlled the patents, and unlicensed duplicating companies were quickly shut down. He originally sold dynamite as “Nobel’s Blasting Powder” but later changed the name to dynamite, from the Ancient Greek word dýnamis, meaning “power.”


In addition to its limited use as a weapon, dynamite was used initially, and is still used today, in the construction, mining, quarrying, and demolition industries. Dynamite has expedited the building of roads, tunnels, canals, and other construction projects worldwide.

Today Alfred Nobel’s name is well known, but more for the prizes honoring achievement and peace than for explosives. The Nobel Prizes are five separate annual prizes that, according to Alfred Nobel’s will of 1895, are awarded to “those who, during the preceding year, have conferred the greatest benefit to humankind” in five categories: physics, chemistry, physiology or medicine, literature, and peace.

Neither Pat nor I have ever had a direct connection to dynamite, but we have certainly benefited from its many applications in construction projects.

Vulcanized Rubber

Vulcanization is a range of processes for hardening rubber.

The Aztec, Olmec, and Maya of Mesoamerica are known to have made rubber using natural latex, a milky, sap-like fluid found in some plants. These ancient rubber makers mixed latex with juice from morning glory vines, which contains a chemical that makes the solidified latex less brittle. Mixing the two ingredients in different proportions produced rubber with different properties. They used rubber to make balls (for ceremonial games in stone-walled courts), sandal soles, rubber bands, and waterproof containers.

Skip ahead to the 19th century. The problem with natural rubber is that it is not useful in its unaltered state: in hot temperatures, it melts and becomes sticky, and in cold temperatures, it becomes rigid and brittle.

Early rubber tube tires would grow sticky on a hot road. Debris would get stuck in them, and eventually the tires would burst.

In the 1830s, American inventor Charles Goodyear was working to make rubber more durable. He tried heating rubber in order to mix other chemicals with it. This seemed to harden and improve the rubber, though the improvement was due to the heating itself and not the chemicals used. Not realizing this, he repeatedly ran into setbacks when his announced hardening formulas did not work consistently. One day in 1839, while trying to mix rubber with sulfur, Goodyear accidentally dropped the mixture into a hot frying pan. To his astonishment, instead of melting further or vaporizing, the rubber remained firm and, as he increased the heat, became harder. Over the next five years, Goodyear worked out a consistent system for this hardening and discovered vulcanization: the treatment of rubber with heat, and originally sulfur, which forms cross-links between the rubber’s polymer chains and gives it better strength, elasticity, and durability.

He patented the process in 1844. His discovery launched decades of successful rubber manufacturing, as rubber was adapted to multiple applications, including automobile tires and footwear.

In 1898, thirty-eight years after Goodyear’s death, the Goodyear Tire and Rubber Company was founded and named after him.

1951 advertisement for Goodyear vulcanized automobile tires.

In 1912, George Oenslager, an American chemist, invented an advanced method of vulcanizing rubber that is still used today. He discovered a derivative of aniline (a colorless, oily liquid present in coal tar) that accelerated the vulcanization of rubber with sulfur, which greatly increased the cost-effectiveness of rubber.

These days, natural rubber is obtained from rubber trees, which require a hot, damp climate. More than 90% of the world’s natural rubber supply comes from mainland Southeast Asia.

Today, more than one billion automobile tires are manufactured worldwide each year. Vulcanized rubber is also used to produce rubber hoses, shoe soles, insulation, vibration dampers, erasers, hockey pucks, shock absorbers, children’s toys, conveyor belts, rubber-lined tanks, bowling balls, and more. Most rubber products are vulcanized because this greatly improves their lifespan, function, and strength.

Like almost everyone else in the world today, Pat and I have long been regular users of vulcanized rubber products.

Stainless Steel Cutlery

Stainless steel is an alloy of iron that is resistant to rusting and corrosion. It contains at least 11% chromium and may contain other elements, such as nickel, carbon, and various other metals and nonmetals, to obtain specific desired properties.

Over the course of the 19th century, many experiments were made with iron and steel to develop new alloys and investigate their properties. By the early 1900s, metallurgists in Germany, France, and the United States were getting close to developing what we now know as stainless steel.

In 1908, English metallurgist Harry Brearley, at Sheffield’s Steelworks, was given a task by a rifle manufacturer who wanted to prolong the life of his gun barrels, which were eroding away too quickly from excessive wear on the internal surface of the barrel.

Brearley set out to create an erosion-resistant steel by developing steel alloys containing chromium, which was known to raise the material’s melting point compared with standard carbon steels. He made several variations of alloys, ranging from 6% to 15% chromium, with different amounts of carbon. On August 13, 1913, Brearley created a steel with 12.8% chromium and 0.24% carbon, which is thought to be the first stainless steel ever made and which proved to be the solution to the gun barrel problem. The discovery was announced two years later, in a January 1915 newspaper article in The New York Times.

In his experiments, Brearley etched his steel samples with nitric acid so that he could identify and examine them under a microscope. He found that his new steel resisted this chemical attack, so he tested the samples with other agents, including lemon juice and vinegar. Brearley was astounded to find that his alloys were still highly resistant, and he immediately recognized the potential of his new steels for food-grade cutlery, saucepans, and other kitchen equipment.

Up to that time, carbon-steel knives were prone to unhygienic rusting if they were not frequently polished, and only expensive sterling silver or electroplated nickel silver cutlery was generally available to avoid such problems. Brearley foresaw an end to the nightly washing, polishing, and putting away of silverware that was then required.

Stainless steel cutlery is widely used today.

Brearley struggled to win his employers’ support for this accidental new product application, so he teamed up with local cutler R. F. Mosley. Brearley was going to call his steel “Rustless Steel,” but Ernest Stuart, Cutlery Manager at Mosley’s Portland Works, called it “Stainless Steel” after testing the material with vinegar, and the name stuck.

Virtually all research into the further development of stainless steels was interrupted by World War I (1914-1918), but efforts were renewed in the 1920s. Brearley’s successor, Dr. W. H. Hatfield, is credited with developing, in 1924, a stainless steel alloy that contains nickel in addition to chromium and is still widely used today.

Harry Brearley’s invention brought affordable cutlery to the masses.

Major technological advances in the 1950s and 1960s allowed the production of large tonnages of stainless steel at an affordable cost. In addition to cutlery, cookware, kitchen sinks, and other culinary uses, stainless steel is used today in surgical tools and medical equipment, surgical implants, temporary dental crowns, buildings, bridges, and transportation applications.

In the kitchen, Pat and I have long been part of the stainless-steel generation, using all the products mentioned above.

Dry Cleaning

Dry cleaning is any cleaning process for clothing and textiles using a solvent other than water.

The earliest records of professional dry cleaning go all the way back to the Ancient Romans. For instance, dry-cleaning shops were discovered in the ruins of Pompeii, a Roman city buried by the eruption of Mount Vesuvius in 79 AD. Those cleaners, known as fullers, used a type of clay known as fuller’s earth along with lye and ammonia (derived from urine) to remove stains such as dirt and sweat from clothing. The process proved quite effective for fabrics too delicate for normal washing and for stains that refused to budge.

As for more modern methods, the biggest revolution in dry cleaning came in the early 19th century. Textile maker Jean Baptiste Jolly of France is traditionally named the father of modern dry cleaning. The story goes that in 1825, a careless maid knocked over a lamp and spilled turpentine on a dirty tablecloth. Jolly noticed that once the turpentine dried, the stains that had marred the fabric were gone. In an experiment, he bathed the entire tablecloth in a bathtub filled with turpentine and found that it came clean once it dried. Jolly used this method when he opened what is often claimed to be the first modern dry-cleaning shop, “Teinturerie Jolly Belin,” in Paris in 1845.

However, a patent for a process called “dry scouring” had been filed with the U.S. Patent Office in 1821, four years before Jolly’s discovery. It belonged to Thomas Jennings, a clothier and tailor in New York City, who became the first African American to be granted a patent in the United States.

While working as a clothier, Jennings, like so many others in his profession, was familiar with the age-old customer complaint that delicate clothes could not be cleaned once they had become stained, because the fabric wouldn’t hold up to traditional washing and scrubbing. Jennings therefore began experimenting with different cleaning solutions and processes before discovering the process he named “dry scouring.” His method was a hit: it not only made him extremely wealthy but also allowed him to buy his wife and children out of slavery and to fund numerous abolitionist efforts.

The exact method Jennings used has been lost to history, as his patent was destroyed in an 1836 fire. After Jennings, other 19th-century dry cleaners used solvents such as turpentine, benzene, kerosene, and gasoline (petrol) to clean clothes. These solvents made dry cleaning a dangerous business. Turpentine caused clothes to smell even after being cleaned, and benzene could be toxic to dry cleaners or customers if left on the clothes. But all of these solvents posed a bigger problem: they were highly flammable. The danger of clothes, and even buildings, catching fire was so great that most cities refused to allow dry cleaning in their business districts.

This led dry cleaners to search for a safer alternative. Chlorinated solvents gained popularity in the early 20th century, after World War I. They removed stains just as well as petroleum-based cleaners without the risk of causing clothes or factories to catch fire. That also meant dry cleaners could move their cleaning facilities back into cities, eliminating the need to transport clothes back and forth between two locations.

By the mid-1930s, the dry-cleaning industry had started to use tetrachloroethylene as the solvent. It has excellent cleaning power, is nonflammable, and is compatible with most garments.

Typical dry cleaning store rack of garments.

Since the 1990s, there has been concern that tetrachloroethylene may be toxic and may cause cancer. Studies are ongoing, but the chemical is still commonly used in dry cleaning.

Years ago, when I worked for a living and wore suits every day, I was a regular user of dry-cleaning services. But in retirement, I have not needed dry cleaning. Pat has long been concerned about the chemicals used in dry cleaning and has therefore avoided the service.

Conclusions

This concludes my blog on fifteen accidental inventions that changed the world.

This also concludes my four-part series on inventions. As a reminder, the previous three blogs covered the top 10 inventions that changed the world (04/18/2023), accidental inventions that are common household items (06/05/2023), and ten more accidental inventions that are common household items (06/14/2023). I realize that I omitted a few inventions in each category and may have to revisit them at another time.

Pat and I really enjoyed talking about how all these inventions affected us!
